If you were lucky enough to get hold of the Google AIY Voice Kit, this series of posts will be of interest. It describes a voice-controlled interface to the home hub that lets you get sensor readings and control actuators using voice commands.

I have implemented a system that runs on a separate Raspberry Pi and queries the home hub through a new REST interface. Having experimented briefly with the Google software, I did not feel it was easy to adapt to my requirements, so this solution uses only the Google hardware. The software is a mix of components selected for the task. The design goal is a system that works out of the box with any home hub installation, irrespective of which zones, sensors and actuators are installed.

Part way into this project I realised that testing voice assistants was particularly difficult. Interpreting voice commands is not an exact science, and this causes frustration when you are designing new software. For this reason I have implemented an architecture that aims to make this less problematic.

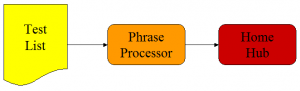

The first stage involves creating a list of the voice commands you want to process. These are fed directly into the Phrase Processor as text, and the response is displayed. You can add alternative phrasings that you really want your hub to understand to the list, then adjust the configuration until they are understood.

When you have your Phrase Processor suitably equipped to handle all the voice commands, you can move on to stage 2.
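
As a rough illustration, stage 1 could look something like the sketch below. It is not the real Phrase Processor: `toy_processor`, the command strings and the test phrases are hypothetical stand-ins, chosen only to show the idea of feeding a list of phrases in as text and reporting the ones that are not yet understood.

```python
from typing import Callable, List, Tuple

# Each test case pairs a phrase with the response we want for it.
TestCase = Tuple[str, str]


def run_phrase_tests(
    process: Callable[[str], str], cases: List[TestCase]
) -> List[Tuple[str, str, str]]:
    """Feed each phrase to the processor as text.

    Returns a list of (phrase, expected, actual) for every mismatch.
    """
    failures = []
    for phrase, expected in cases:
        actual = process(phrase)
        if actual != expected:
            failures.append((phrase, expected, actual))
    return failures


def toy_processor(phrase: str) -> str:
    """Hypothetical stand-in for the Phrase Processor (keyword matching only)."""
    words = phrase.lower().split()
    if "temperature" in words:
        return "get temperature"
    if "light" in words and "on" in words:
        return "light on"
    return "unknown"


# Hypothetical test list, including an alternative phrasing.
CASES = [
    ("what is the temperature in the kitchen", "get temperature"),
    ("turn the light on", "light on"),
    ("switch on the lamp", "light on"),
]

failures = run_phrase_tests(toy_processor, CASES)
for phrase, expected, actual in failures:
    print(f"FAIL: {phrase!r} gave {actual!r}, expected {expected!r}")
```

Here the third phrase fails because the stand-in processor does not know that "lamp" means "light". That is exactly the kind of gap you would close by adjusting the configuration before moving on.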

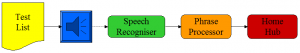

Now the Speech Recogniser is brought into play, and the test list is read out loud. The hope is that the voice commands are still obeyed correctly. Because the Phrase Processor has already been verified against the same list, any discrepancies at this stage are solely down to the Speech Recogniser.
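
The stage 2 check can be sketched in the same spirit. This is only an illustration under assumptions: `toy_processor` is again a hypothetical stand-in, and the "heard" strings are made-up transcripts rather than real Speech Recogniser output. The idea is to compare the response for what was spoken with the response for what was heard.

```python
from typing import Callable, Dict, List, Tuple


def toy_processor(phrase: str) -> str:
    """Hypothetical stand-in for a Phrase Processor that passed stage 1."""
    words = phrase.lower().split()
    if "temperature" in words:
        return "get temperature"
    if "light" in words and "on" in words:
        return "light on"
    return "unknown"


def find_recogniser_failures(
    process: Callable[[str], str], transcripts: Dict[str, str]
) -> List[Tuple[str, str]]:
    """Return (spoken, heard) pairs where the transcript changes the response.

    The processor already handles every spoken phrase correctly, so any
    difference here is attributed to the speech recognition step.
    """
    failures = []
    for spoken, heard in transcripts.items():
        if process(heard) != process(spoken):
            failures.append((spoken, heard))
    return failures


# Hypothetical transcripts: phrase read out -> text the recogniser returned.
TRANSCRIPTS = {
    "turn the light on": "turn the light on",
    "what is the temperature": "what is the temper chair",
}

recogniser_failures = find_recogniser_failures(toy_processor, TRANSCRIPTS)
for spoken, heard in recogniser_failures:
    print(f"Misheard: said {spoken!r}, recogniser returned {heard!r}")
```

Comparing responses rather than raw text means a harmless transcription difference, such as a dropped "the", is not reported as long as the command is still obeyed.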

In the next post I will examine the Phrase Processor in more detail.